Using Slim CI to scale dbt development: A how-to guide

What is Slim CI?

The phrase “Slim CI” refers to a development pattern that enables the minimum amount of work needed to validate a set of proposed changes to your data pipelines.

When working with a large dbt codebase, running all of your dbt models to test every change can be problematic for several reasons:

Rebuilding the full tree of models can take a long time

Data gets duplicated many times across all of your test and staging schemas

Test environments become stale as production evolves out from under you

Slim CI works by combining two dbt techniques:

Using the dbt

--selectflag to limit your test runs to only build the models that have proposed changesUsing the dbt

--deferflag to fall back to an existing table for any models whose definitions have not been changed

Let’s examine each feature one by one, and then see how they combine to create a Slim CI workflow.

Partial dbt runs using --select

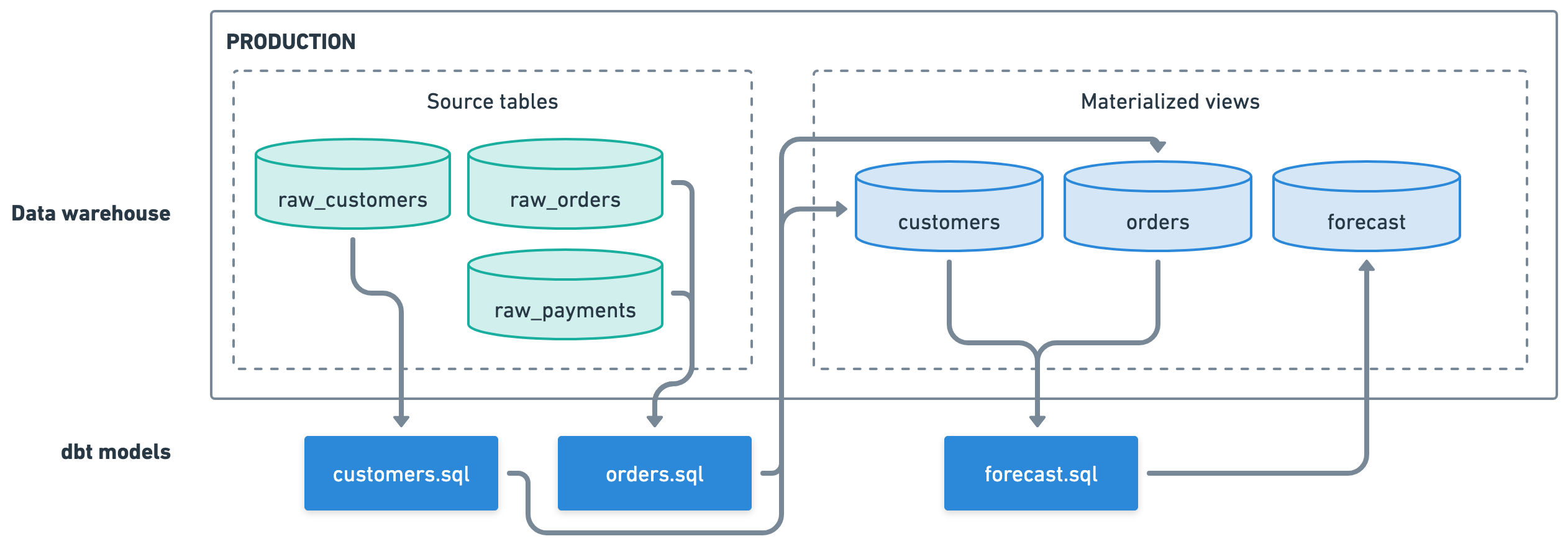

Here's a simple example dbt project:

We have three source tables in the data warehouse,

We have three source tables in the data warehouse, raw_customers, raw_orders, and raw_payments. And we have three dbt models: customers and orders gets our data ready for reporting and materializes them into new tables, and forecast reads from our reporting data, applies some business logic, and then persists out the output.

The first time we run this dbt project, the command will be simply:

dbt run --target=production…which will create our materialized views and output various metadata files into the target/ directory of our dbt project.

Let's say you are developing new logic in customers.sql and need to run this model repeatedly while iterating. By default, each time you call dbt run, this entire set of tables is going to be re-built. If raw_orders is a very big table, this could be expensive — both in terms of dollars spent querying your database, and development time while you wait for each run to finish.

To work around this, we can use the --select flag to instruct dbt to only run models whose SQL definitions have changed, as well as any models downstream whose results would also change. The commands will look like this:

# Save the artifacts from the previous run

cp -r target base_production_target

# Make some change to customers.sql

# Run only changed (and downstream) models, compared to the previous run

dbt run --target=production --select state:modified+ --state=base_production_target/This will re-generate the customers and forecast views, without re-building our large orders table.

.png) If you’re using dbt Cloud instead of dbt Core, your previous artifacts are stored for you automatically, so there’s no need to maintain a

If you’re using dbt Cloud instead of dbt Core, your previous artifacts are stored for you automatically, so there’s no need to maintain a prev_target directory.

Querying across environments using --defer

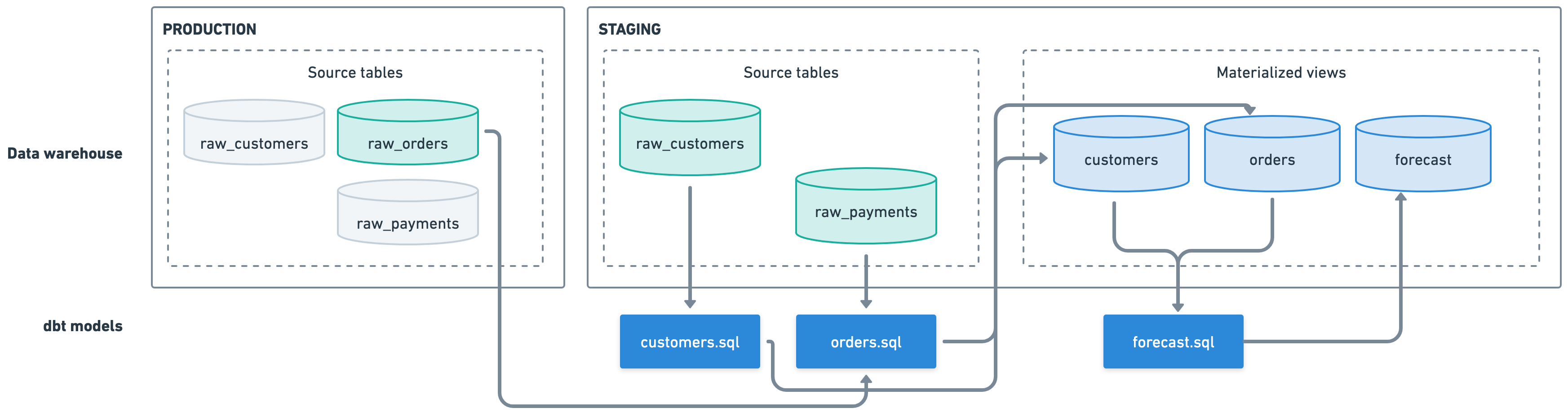

It is a good idea to validate data changes before applying them to your production environment, so usually you’ll have a separate schema where you’ll do your testing. In our example we’ll call it staging.

Because the raw_orders table is so big, we’d prefer not to copy all of it into our staging schema. But, we’d still like to use real order data when testing our models.

Enter the --defer option. When you use this flag, dbt will fall back to reading from a table in a different target if it doesn’t already exist in your current target. Let’s see it in action:

# Run all my models against the staging schema, but fall back to production

# if an upstream source or model doesn't exist

dbt run --target=staging --defer --state=base_production_target/With this, dbt will detect that raw_orders doesn’t exist in the staging schema, and so it will read from the raw_orders table in our production schema, as used in our original production run.

If using dbt Cloud, you don’t need to manually provide the base state — it will be stored and used for your runs automatically.

If using dbt Cloud, you don’t need to manually provide the base state — it will be stored and used for your runs automatically.

Putting it all together to get Slim CI

Combining --select and --defer drastically reduces both the time and cost of iterating on dbt model changes.

Here will be our workflow:

Our

productiontarget will be generated by a complete run of our dbt project (usually as part of a continuous integration system). We’ll save the state directory of this run so that we can reference it later.Developers will all have their own individual dev schema, so that they can test their changes independently.

When testing a change, developers will do a partial, deferred build with this command, replacing

my_devwith their own development target:

dbt run --target=my_dev --select state:modified+ --defer --state=base_production_target/This workflow allows developers to modify only specific dbt models while testing the entire data pipeline end-to-end using your real data. For instance, if you’ve made changes to only the customer.sql and forecast.sql models and run dbt with the above flags, the execution will look like this:

.png) For a project with hundreds or even thousands of dbt models, the time and money savings from implementing this workflow can be very large!

For a project with hundreds or even thousands of dbt models, the time and money savings from implementing this workflow can be very large!

Taking the next step

This post showed you how to start using a Slim CI pattern to speed up your dbt development workflow.

Beyond the basics, you can extend this pattern in several effective ways. dbt has powerful selection syntax for customizing the scope of your runs. And Slim CI makes it scalable to run and test dbt for every pull request in your repo as part of a broader continuous integration strategy, which we highly recommend. You can even layer BI previews from Hashboard on top of Slim CI builds, which allows reviewers to fully validate your end-to-end data workflows in a lightweight way.