Like many software engineers, I’m very excited about how LLMs are transforming knowledge work. Just a year after the first release of ChatGPT, an AI assistant is now a core part of my daily workflow.

Generative AI has been particularly buzzy in the data space. The idea that you can ask a question about your business in natural language and get a correct, rich, insightful answer is the holy grail of data science, and it’s starting to feel like it might actually be within reach.

Our core mission at Hashboard is to make data accessible and useful for everyone, no matter your background. So like many data startups, we couldn’t resist building an LLM integration into our product.

Developing an AI Assistant for BI

The architecture of our first AI feature, called the “AI Assistant”, was relatively simple. We had already built a YAML-based API for configuring charts and dashboards in Hashboard, so the idea was to describe this API in the LLM’s prompt, share context about the data the user is currently exploring (the available columns, filters, etc.), and then append the user’s question. We’d ask the LLM to generate a chart that answers that question.

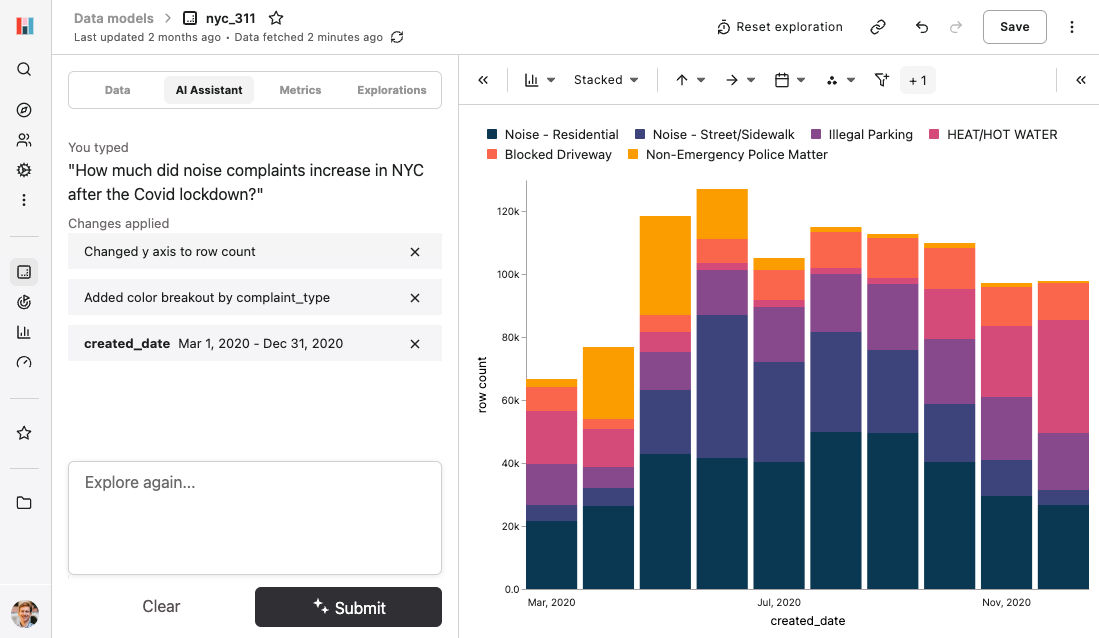
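
As a rough illustration of that prompt assembly, here is a minimal Python sketch. It is not Hashboard's actual implementation: the `ExplorationContext` class, the `build_prompt` function, the dataset and column names, and the placeholder API description are all hypothetical, and the call to the LLM itself is left out.

```python
from dataclasses import dataclass, field


@dataclass
class ExplorationContext:
    """What the user is currently exploring (hypothetical shape)."""
    dataset: str
    columns: list[str]
    active_filters: dict[str, str] = field(default_factory=dict)


# Placeholder text: a real prompt would carry the actual chart-config API description.
API_DESCRIPTION = "Charts are configured in YAML. (Placeholder for the real API description.)"


def build_prompt(context: ExplorationContext, user_question: str) -> str:
    """Assemble the prompt: API description, then data context, then the question."""
    filters = ", ".join(
        f"{name} = {value}" for name, value in context.active_filters.items()
    ) or "none"
    sections = [
        API_DESCRIPTION,
        f"Dataset: {context.dataset}",
        f"Available columns: {', '.join(context.columns)}",
        f"Active filters: {filters}",
        f"User question: {user_question}",
        "Respond with a YAML chart configuration that answers the question.",
    ]
    return "\n\n".join(sections)


# Assumed example context, loosely modeled on the NYC 311 sample dataset.
context = ExplorationContext(
    dataset="nyc_311_complaints",
    columns=["created_date", "complaint_type", "borough"],
)
prompt = build_prompt(
    context, "How much did noise complaints increase after the Covid lockdown?"
)
print(prompt)
```

Note that everything the model knows about the data comes from the context section, which matters for the failure modes described later.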

We had an initial prototype working in just a few days. (It’s mind-blowing how quickly these kinds of features can be built!) And our early demos were magical: we could pull up our favorite sample dataset, NYC 311 complaint calls, and ask natural language questions like “How much did noise complaints increase in NYC after the Covid lockdown?”

After a few rounds of polish and QA, we were ready for some real user feedback, so we turned it on for some customers.

What users actually did

Although results from our internal testing were impressive, the real-world results were not quite there. Most users tried the feature out a few times and then never came back.

What happened? Reviewing the data, we found four distinct failure modes:

Mistaken identity: Many users expected the AI to behave like a general-purpose assistant, seeking advice on how to use the product instead of looking for answers directly. Questions like “How do I replace 'null' with '0' in this table?” weren’t uncommon. But the feature only generated charts, so users didn’t get the answers they expected.

Overestimating capabilities: Some users asked the assistant to build charts that aren’t actually possible to build in Hashboard, like “Can you color the bars with polka dots?”

Using the wrong data: Our customers usually have many different datasets modeled in Hashboard, and asking users to choose the right dataset before asking a question was a big barrier to overcome. You’re never going to get a good answer about marketing channels if you’re exploring a logistics dataset that doesn’t have any marketing data to begin with.

Inaccurate data filtering: Filtering a dataset is particularly challenging when you don’t have context on the data schema being used upstream. A classic example: if your dataset encodes location as a zip code, asking “How many widgets did we sell in our Northeast region?” only works if the model also knows which zip codes belong to that region.
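
To make the zip code example concrete, here is a small Python sketch. All of the data is made up for illustration: the `sales` rows, the `ZIP_TO_REGION` lookup, and the region assignments are hypothetical stand-ins for business context that usually lives somewhere upstream of the dataset.

```python
# Hypothetical lookup: which zip codes the business counts as each region.
# In practice this mapping lives upstream and is invisible to the model.
ZIP_TO_REGION = {
    "10001": "Northeast",  # New York, NY
    "02108": "Northeast",  # Boston, MA
    "60601": "Midwest",    # Chicago, IL
    "94105": "West",       # San Francisco, CA
}

# Made-up sales rows: the dataset stores a zip code, not a region.
sales = [
    {"zip_code": "10001", "widgets": 120},
    {"zip_code": "02108", "widgets": 80},
    {"zip_code": "60601", "widgets": 200},
    {"zip_code": "94105", "widgets": 150},
]


def widgets_sold_in_region(rows, region, zip_to_region):
    """Sum widgets for rows whose zip code maps to the given region."""
    return sum(
        row["widgets"]
        for row in rows
        if zip_to_region.get(row["zip_code"]) == region
    )


# Without the mapping, a literal filter on "region" matches nothing,
# because no such column exists in the dataset.
naive_total = sum(
    row["widgets"] for row in sales if row.get("region") == "Northeast"
)

# With the mapping supplied as extra context, the filter can be resolved.
informed_total = widgets_sold_in_region(sales, "Northeast", ZIP_TO_REGION)

print(naive_total, informed_total)  # prints: 0 200
```

The naive filter does not fail loudly; it just returns an empty result, which is part of what makes this failure mode hard for users to diagnose.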

Overall, this was a problem about constraints. The AI had particular constraints about what it could and could not do, and those constraints did not line up with what users expected.

Principles for AI Data Features

From our AI beta, we’ve learned a few general principles about these kinds of features.

AI capabilities need clarity: If you’re crafting an AI feature to "use the app for you," it's vital to set clear boundaries. Users often overestimate AI's capacities, leading to frustration.

Tech-savvy users prefer precision: Using natural language to ask questions about data sounds appealing, but when data practitioners have an actual question to answer, they’re (for now) still reaching for high-precision, traditional workflows – as long as they’re efficient and easy to use.

Data selection is key: Features that require users to pre-select data will not work well. The real challenge lies in identifying the right dataset, columns, and filters.

Users actually just want product help: The most common naturally-emerging use case for a conversational UI was asking for help using the app. To do this well, your product needs good documentation to feed into the model or prompt. This is useful even without AI!

It’s clear from this why LLMs for coding have taken off much more quickly than LLMs for data analysis. Writing a function is a fairly constrained activity: a good function has well-defined inputs and outputs, and can be easily tested and validated, even if the implementation is complicated. But data exploration is a much more open-ended activity. The AI often will not have enough context to just jump straight to an answer. Until this gap is bridged, the value will come from helping users use their tools more effectively, not replacing them entirely.

The takeaway: in the short term, data analysts and engineers are going to benefit a lot from well-scoped LLM-powered features like text-to-SQL. But although they are fun to play with and can create some flashy demos, AI features aimed at making data science more accessible to non-experts still have a long way to go before they can truly make a big impact.

What’s Next

We’re still iterating on AI features in Hashboard with these lessons in mind. We’re working on adding more natural language capabilities to our recently launched Data Search feature, which has a more precise interface and works across all the datasets in your project. And most importantly, we’ll continue listening to our users and iterating. AI features are only worth building if they actually get used!